1. Advanced Deep Learning Architectures for Time Series

1.1 Temporal Graph Neural Networks (TGNN)

TGNNs extend traditional GNNs to handle dynamic graph structures in time series data:

- Application in financial markets: Modeling the evolving relationships between assets over time.

- Social network analysis: Capturing the dynamics of information diffusion and influence propagation.

- Traffic flow prediction: Representing road networks as dynamic graphs for more accurate forecasting.

Technical detail: TGNNs typically employ a combination of graph convolution operations and recurrent neural networks. For instance, a TGNN might use a graph attention network (GAT) layer to capture spatial dependencies, followed by a gated recurrent unit (GRU) to model temporal evolution. The key innovation lies in how these networks update node embeddings over time, often using techniques like time-encoding or temporal attention mechanisms.
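
The GAT-then-GRU pattern described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the function names (`graph_attention`, `gru_update`), the single attention head, the random weights, and the randomly generated graph snapshots are all hypothetical choices made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def graph_attention(h, adj, W, a):
    """Single-head GAT-style layer: attention-weighted neighbour aggregation."""
    z = h @ W                                   # (N, F') projected node features
    f = z.shape[1]
    # Attention logit e_ij = LeakyReLU(a_src . z_i + a_dst . z_j)
    logits = (z @ a[:f])[:, None] + (z @ a[f:])[None, :]
    logits = np.where(logits > 0, logits, 0.2 * logits)
    logits = np.where(adj > 0, logits, -1e9)    # mask out non-edges
    logits -= logits.max(axis=1, keepdims=True)
    alpha = np.exp(logits)
    alpha /= alpha.sum(axis=1, keepdims=True)   # softmax over each node's neighbours
    return alpha @ z, alpha

def gru_update(x, h_prev, p):
    """Standard GRU cell, applied to every node independently."""
    z = sigmoid(x @ p["Wz"] + h_prev @ p["Uz"])             # update gate
    r = sigmoid(x @ p["Wr"] + h_prev @ p["Ur"])             # reset gate
    cand = np.tanh(x @ p["Wh"] + (r * h_prev) @ p["Uh"])    # candidate state
    return (1.0 - z) * h_prev + z * cand

N, F_IN, F_HID, T = 5, 3, 4, 6                  # nodes, input dim, hidden dim, snapshots
W = rng.normal(scale=0.5, size=(F_IN, F_HID))
a = rng.normal(scale=0.5, size=2 * F_HID)
params = {k: rng.normal(scale=0.5, size=(F_HID, F_HID))
          for k in ("Wz", "Uz", "Wr", "Ur", "Wh", "Uh")}

h = np.zeros((N, F_HID))                        # node embeddings carried through time
for t in range(T):
    x_t = rng.normal(size=(N, F_IN))            # node features at snapshot t
    adj_t = (rng.random((N, N)) < 0.4).astype(float)
    np.fill_diagonal(adj_t, 1.0)                # self-loops keep every row non-empty
    spatial, alpha = graph_attention(x_t, adj_t, W, a)
    h = gru_update(spatial, h, params)          # temporal update of each embedding

print(h.shape)
```

The adjacency matrix is redrawn at every step to mimic a graph whose edges change over time; in a real model the weights would be learned and the snapshots would come from data.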

Research direction: Developing efficient training algorithms for large-scale temporal graphs and exploring interpretable TGNN architectures.

1.2 Neural Architecture Search (NAS) for Time Series

Automated discovery of optimal neural network architectures for specific time series tasks:

- MetaTS: A meta-learning approach for time series forecasting that adapts model architectures to different datasets.

- Efficient NAS: Developing resource-aware NAS algorithms suitable for edge devices and IoT applications.

Technical detail: NAS for time series often employs a search space that includes various types of layers (e.g., convolutional, recurrent, attention-based) and connection patterns. The search strategy might use reinforcement learning, where an agent learns to design architectures, or gradient-based approaches like DARTS (Differentiable Architecture Search). The optimization objective typically balances prediction accuracy with computational efficiency.
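
The gradient-based idea behind DARTS can be illustrated with a toy example: the discrete choice among candidate operations is relaxed into a softmax-weighted mixture, and the mixing logits are optimised by gradient descent. The sketch below is a deliberate simplification and rests on several assumptions: the three candidate operations (`last`, `mean3`, `zero`) have no trainable weights, the target is synthetic, and the bilevel train/validation split used by DARTS proper is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=500)

# Sliding windows of length 3 over the series.
win = np.stack([x[i:i + 3] for i in range(len(x) - 2)])

# Synthetic target: the window mean plus a little noise, so "mean3" is the right op.
y = win.mean(axis=1) + 0.01 * rng.normal(size=len(win))

# Hypothetical search space: candidate operations applied to each window.
CANDIDATES = {
    "last": lambda w: w[:, -1],             # persistence
    "mean3": lambda w: w.mean(axis=1),      # smoothing
    "zero": lambda w: np.zeros(len(w)),     # "no connection"
}
op_names = list(CANDIDATES)
outputs = np.stack([op(win) for op in CANDIDATES.values()])   # (n_ops, n_samples)

def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()

alpha = np.zeros(len(op_names))             # architecture parameters (logits)
for _ in range(300):
    weights = softmax(alpha)
    resid = weights @ outputs - y           # mixed-operation prediction error
    g_w = 2.0 * (outputs @ resid) / len(y)  # dLoss/dweights for mean squared error
    g_alpha = weights * (g_w - weights @ g_w)   # chain rule through the softmax
    alpha -= 1.0 * g_alpha

weights = softmax(alpha)
chosen = op_names[int(np.argmax(alpha))]    # discretise: keep the strongest op
print(chosen, np.round(weights, 3))
```

After the search, the operation with the largest logit is kept and the others are discarded, which is the discretisation step that turns the relaxed mixture back into a concrete architecture.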

Future trend: Integrating domain knowledge into the NAS process to guide the search towards more meaningful architectures.

1.3 Transformer Variants for Long Sequences

Addressing the limitations of standard Transformers in processing long time series:

- Informer: Utilizing a ProbSparse self-attention mechanism to efficiently handle long sequence time series forecasting.

- Autoformer: Incorporating a decomposition architecture into the Transformer for improved long-term forecasting.

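Technical detail: The Informer model introduces a ProbSparse self-attention mechanism that computes full attention only for the queries whose attention distributions are most informative (roughly the top c·ln L queries under a sparsity measurement), reducing the time and memory complexity per layer from O(L^2) to O(L log L), where L is the sequence length. Autoformer, on the other hand, embeds series decomposition blocks that progressively separate trend and seasonal components, and replaces self-attention with an Auto-Correlation mechanism that discovers period-based dependencies and aggregates similar sub-series.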
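
A rough NumPy sketch of ProbSparse attention follows. In the Informer paper the selection is made over queries: each query is scored by a sparsity measurement (maximum minus mean of its scaled dot products against a sample of keys), the top-scoring queries receive full attention, and the remaining "lazy" queries fall back to the mean of the values. The function name `probsparse_attention`, the constant `c=5`, and the use of one shared key sample for all queries are assumptions made for brevity; masking and multi-head handling are left out.

```python
import numpy as np

def probsparse_attention(Q, K, V, c=5, rng=None):
    """Single-head, unmasked ProbSparse-style attention (illustrative only)."""
    if rng is None:
        rng = np.random.default_rng(0)
    L_q, d = Q.shape
    L_k = K.shape[0]
    n_sample = min(L_k, int(np.ceil(c * np.log(L_k))))   # keys used for scoring
    u = min(L_q, int(np.ceil(c * np.log(L_q))))          # number of active queries

    # Sparsity measurement M(q) = max_j(q.k_j) - mean_j(q.k_j) on sampled keys.
    idx = rng.choice(L_k, size=n_sample, replace=False)
    sampled = Q @ K[idx].T / np.sqrt(d)
    M = sampled.max(axis=1) - sampled.mean(axis=1)
    top = np.argsort(M)[-u:]                             # most informative queries

    # Lazy queries output mean(V); active queries get ordinary softmax attention.
    out = np.tile(V.mean(axis=0), (L_q, 1))
    scores = Q[top] @ K.T / np.sqrt(d)
    scores -= scores.max(axis=1, keepdims=True)
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    out[top] = attn @ V
    return out, top

rng = np.random.default_rng(1)
L, d = 96, 8
Q, K, V = (rng.normal(size=(L, d)) for _ in range(3))
out, top = probsparse_attention(Q, K, V)
print(out.shape, len(top))
```

Only `u` rows of the full L-by-L score matrix are ever formed, and the scoring pass touches `n_sample` keys per query, which is where the O(L log L) cost comes from.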

Ongoing research: Developing attention mechanisms that can capture multi-scale temporal dependencies more effectively.

2. AI-Driven Time Series Preprocessing and Feature Engineering

2.1 Automated Data Cleaning and Imputation

AI systems for handling missing data and anomalies in time series:

- Deep learning-based imputation: Using models like bidirectional RNNs or VAEs for missing value estimation.

- Anomaly detection and correction: Employing autoencoders or GANs to identify and rectify anomalous data points.

Future direction: Developing end-to-end systems that seamlessly integrate data cleaning, imputation, and downstream analysis.

2.2 AI-Powered Feature Extraction

Automated discovery of relevant features from raw time series data:

- Symbolic representations: Using AI to learn optimal symbolic representations of time series (e.g., learned extensions of Symbolic Aggregate approXimation, SAX).

- Deep feature synthesis: Adapting techniques like those used in automated machine learning for time series-specific feature generation.
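
For reference, the classical (non-learned) SAX transform that such methods build on can be written in a few lines: z-normalise the series, average it over equal-width segments (piecewise aggregate approximation), and map each segment mean to a letter using breakpoints that split the standard normal distribution into equiprobable bins. The function name `sax` and the default four-letter alphabet are choices made for this sketch, and it assumes the input is not constant (otherwise the standard deviation is zero).

```python
import numpy as np
from statistics import NormalDist

def sax(series, n_segments, alphabet="abcd"):
    """Classical SAX: z-normalise, piecewise-aggregate, then discretise."""
    x = np.asarray(series, dtype=float)
    x = (x - x.mean()) / x.std()                         # z-normalisation
    paa = np.array([seg.mean() for seg in np.array_split(x, n_segments)])
    a = len(alphabet)
    # Breakpoints that cut N(0, 1) into `a` equiprobable regions.
    breakpoints = [NormalDist().inv_cdf(i / a) for i in range(1, a)]
    symbols = np.searchsorted(breakpoints, paa)
    return "".join(alphabet[i] for i in symbols)

word = sax(np.arange(16), n_segments=4)
print(word)  # -> abcd
```

A learned variant would replace the fixed Gaussian breakpoints or the fixed segment boundaries with parameters fitted to the data.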

Research focus: Creating interpretable feature extraction methods that can provide insights into the underlying processes generating the time series.

3. AI in Multivariate and High-Dimensional Time Series Analysis

3.1 Dimension Reduction and Representation Learning

AI techniques for handling high-dimensional time series data:

- Variational Autoencoders (VAEs) for Time Series: Learning compact, meaningful representations of complex multivariate time series.

- Dynamic Mode Decomposition with Deep Learning: Combining classical dynamical systems theory with modern AI for dimensionality reduction.

Emerging trend: Developing methods that can preserve both temporal dynamics and inter-variable relationships in the reduced space.

3.2 Causal Structure Learning in Multivariate Time Series

AI approaches to uncover causal relationships in complex systems:

- Neural Granger Causality: Extending traditional Granger causality using neural networks to capture nonlinear causal relationships.

- Causal Discovery with Reinforcement Learning: Using RL agents to explore and identify causal structures in multivariate time series.

Technical detail: Neural Granger Causality typically trains one network per target variable, each predicting its target from the past values of all variables. A sparsity-inducing penalty (e.g., group lasso) is applied to the first-layer weights, grouped by input variable; an input whose weight group is driven to zero is interpreted as not Granger-causing the target. In contrast, RL-based causal discovery formulates the problem as a Markov Decision Process, where the agent’s actions involve adding or removing edges in a causal graph, with rewards based on the resulting model’s predictive performance and complexity.
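
The group-lasso mechanism can be sketched with a one-hidden-layer network trained by proximal gradient descent. Everything concrete here is an assumption made for illustration: the synthetic data (x1 drives x2 nonlinearly, x3 is unrelated noise), the network size, the learning rate `LR`, and the penalty strength `LAM`. Only the network for the target x2 is trained; a full analysis would repeat this for every variable.

```python
import numpy as np

rng = np.random.default_rng(0)
T, LAG, HIDDEN = 2000, 2, 4

# Synthetic system: x1 -> x2 with a one-step delay; x3 is independent noise.
x1 = rng.normal(size=T)
x3 = rng.normal(size=T)
x2 = np.zeros(T)
x2[1:] = np.tanh(2.0 * x1[:-1]) + 0.1 * rng.normal(size=T - 1)
series = np.stack([x1, x2, x3], axis=1)                  # (T, 3)

# X[n, v, k-1] holds variable v at lag k for the target at time n + LAG.
X = np.stack([series[LAG - k:T - k] for k in range(1, LAG + 1)], axis=2)
y = series[LAG:, 1]                                      # target: x2
n, n_vars = len(y), series.shape[1]

W1 = 0.1 * rng.normal(size=(n_vars, LAG, HIDDEN))        # one weight group per input series
b1 = np.zeros(HIDDEN)
w2 = 0.5 * rng.normal(size=HIDDEN)
b2 = 0.0
LR, LAM = 0.05, 0.1

for _ in range(400):
    # Forward pass and gradients of 0.5 * mean squared error.
    h = np.tanh(np.einsum("nvk,vkh->nh", X, W1) + b1)
    g_pred = (h @ w2 + b2 - y) / n
    g_pre = np.outer(g_pred, w2) * (1.0 - h ** 2)
    g_W1 = np.einsum("nvk,nh->vkh", X, g_pre)
    g_b1, g_w2, g_b2 = g_pre.sum(axis=0), h.T @ g_pred, g_pred.sum()
    W1 -= LR * g_W1
    b1 -= LR * g_b1
    w2 -= LR * g_w2
    b2 -= LR * g_b2
    # Proximal step for the group lasso: shrink each input series' weight block.
    for v in range(n_vars):
        norm = np.linalg.norm(W1[v])
        W1[v] *= max(0.0, 1.0 - LR * LAM / max(norm, 1e-12))

group_norms = np.linalg.norm(W1.reshape(n_vars, -1), axis=1)
print(dict(zip(["x1", "x2", "x3"], np.round(group_norms, 3))))
```

With this setup the x1 group would be expected to keep the largest norm while the x2 and x3 groups shrink towards zero, which is read as "x1 Granger-causes x2". The exact values depend on the seed and hyperparameters.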

Future direction: Integrating causal discovery with predictive modeling for more robust and interpretable forecasting systems.

4. AI-Enhanced Time Series Forecasting

4.1 Hybrid Models: Combining Statistical Methods with Deep Learning

Leveraging the strengths of both traditional statistical models and modern AI:

- DeepAR+: Enhancing Amazon’s DeepAR with statistical components for improved uncertainty quantification.

- NeuralProphet: Extending the decomposable structure of Facebook’s Prophet model with neural network components (such as autoregression) for flexibility and interpretability.

Research opportunity: Developing theoretically grounded approaches for combining statistical and deep learning models optimally.

4.2 Multi-Horizon and Probabilistic Forecasting

Advanced AI techniques for comprehensive future predictions:

- Seq2Seq models with Temporal Attention: Improving long-term forecasting accuracy by focusing on relevant historical patterns.

- Normalizing Flows for Time Series: Generating full predictive distributions rather than point estimates.

Ongoing challenge: Balancing computational efficiency with the need for accurate long-term, probabilistic forecasts.

5. AI in Specialized Time Series Domains

5.1 AI for Continuous-Time Series Analysis

Adapting AI methods to handle irregularly sampled or continuous-time data:

- Neural ODEs for Irregular Time Series: Modeling the underlying continuous dynamics of sporadically observed processes.

- Attention-based Continuous-Time Models: Developing attention mechanisms that can handle arbitrary time intervals between observations.

Technical detail: Neural ODEs represent the dynamics of a system using a neural network that defines the time derivative of the hidden state, dh/dt = f_θ(h, t). The hidden state at any time is then obtained by integrating this ODE from the initial state with a numerical solver. For time series, this handles irregular sampling naturally, because the solver can be asked for the state at arbitrary observation times. The model can be trained end-to-end with the adjoint sensitivity method, which obtains gradients by solving a second ODE backward in time, so memory use does not grow with the number of solver steps.
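
The forward pass of such a model can be sketched as follows: a small network defines the derivative of the hidden state, and a fixed-step Runge-Kutta solver integrates it between irregularly spaced observation times. The helper names (`rk4_step`, `odeint_at`), the untrained random weights, and the fixed-step solver are assumptions for illustration; training (for example with the adjoint method) is not shown, and practical implementations usually rely on adaptive solvers.

```python
import numpy as np

def rk4_step(f, h, dt):
    """One classical fourth-order Runge-Kutta step for dh/dt = f(h)."""
    k1 = f(h)
    k2 = f(h + 0.5 * dt * k1)
    k3 = f(h + 0.5 * dt * k2)
    k4 = f(h + dt * k3)
    return h + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

def odeint_at(f, h0, times, max_step=0.05):
    """Integrate from times[0] and return the state at every requested time."""
    states = [np.asarray(h0, dtype=float)]
    for t_prev, t_next in zip(times[:-1], times[1:]):
        gap = t_next - t_prev
        n_sub = max(1, int(np.ceil(gap / max_step)))     # sub-steps for this gap
        h = states[-1]
        for _ in range(n_sub):
            h = rk4_step(f, h, gap / n_sub)
        states.append(h)
    return np.stack(states)

# Hypothetical, untrained dynamics network f_theta(h) = tanh(h W1 + b1) W2.
rng = np.random.default_rng(0)
W1 = rng.normal(scale=0.5, size=(2, 16))
b1 = np.zeros(16)
W2 = rng.normal(scale=0.5, size=(16, 2))

def dynamics(h):
    return np.tanh(h @ W1 + b1) @ W2

obs_times = np.array([0.0, 0.13, 0.2, 0.9, 1.0, 2.7])    # irregular timestamps
trajectory = odeint_at(dynamics, [1.0, 0.0], obs_times)
print(trajectory.shape)
```

Because the solver is asked for states at whatever timestamps the data provides, no resampling or imputation onto a regular grid is needed.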

Future trend: Integrating physical constraints and domain knowledge into continuous-time neural network models.

5.2 AI in Spatio-Temporal Analysis

Advanced techniques for analyzing data with both spatial and temporal components:

- ConvLSTM and variants: Capturing spatio-temporal dependencies in climate data, video sequences, etc.

- Graph Neural ODEs: Modeling the evolution of spatial relationships over time in complex systems.

Research direction: Developing scalable methods for global-scale spatio-temporal forecasting, crucial for climate modeling and geospatial analytics.

6. Explainable AI for Time Series

6.1 Interpretable Deep Learning for Time Series

Making complex AI models more understandable in the context of time series:

- LIME and SHAP adaptations for time series: Explaining individual predictions in time series forecasting.

- Attention Visualization Techniques: Developing intuitive ways to visualize what temporal patterns the model focuses on.

Ongoing challenge: Creating explanation methods that can handle the temporal dependencies and varying time scales in time series data.

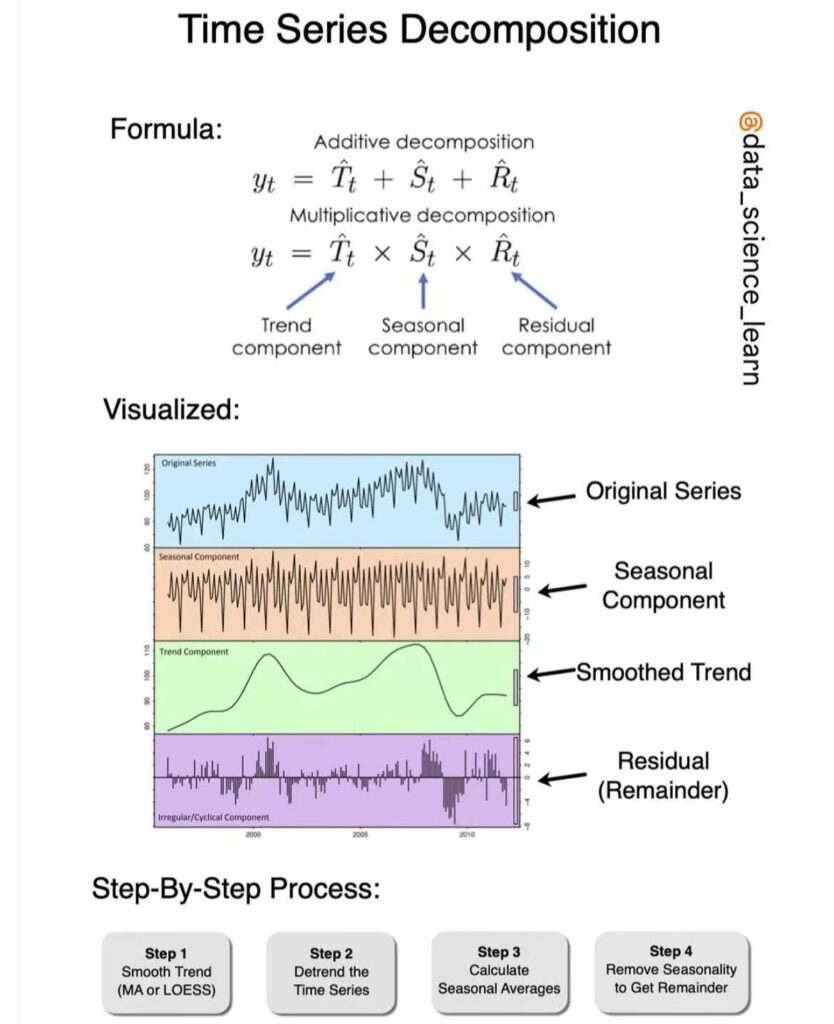

6.2 AI-Assisted Time Series Decomposition

Using AI to provide meaningful decompositions of complex time series:

- Neural Decomposition: Learning data-driven, interpretable components (trend, seasonality, etc.) using structured neural networks.

- Symbolic Regression with Genetic Algorithms: Discovering interpretable mathematical expressions that describe time series components.

Future direction: Integrating domain-specific knowledge to guide the decomposition process towards more meaningful and actionable insights.

7. AI for Real-Time and Streaming Time Series Analysis

7.1 Online Learning for Time Series

Adapting AI models to learn continuously from streaming data:

- Incremental Learning Algorithms: Developing neural network architectures that can update efficiently with new data without forgetting past patterns.

- Concept Drift Detection and Adaptation: AI systems that can automatically detect changes in the underlying data distribution and adapt accordingly.
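
As one concrete example of drift detection, the Page-Hinkley test monitors the cumulative deviation of a stream from its running mean and raises an alarm when that deviation rises far enough above its historical minimum. The sketch below detects upward shifts in the mean only; the class layout and the `delta` and `threshold` defaults are illustrative assumptions that would need tuning on real data.

```python
class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a stream."""

    def __init__(self, delta=0.005, threshold=5.0):
        self.delta = delta            # tolerated drift magnitude
        self.threshold = threshold    # alarm level
        self.n = 0
        self.mean = 0.0
        self.cum = 0.0                # cumulative deviation from the running mean
        self.min_cum = 0.0            # smallest cumulative deviation seen so far

    def update(self, x):
        """Consume one observation; return True if drift is signalled."""
        self.n += 1
        self.mean += (x - self.mean) / self.n
        self.cum += x - self.mean - self.delta
        self.min_cum = min(self.min_cum, self.cum)
        return self.cum - self.min_cum > self.threshold

# Toy stream: the level jumps from 0 to 1 at index 100.
stream = [0.0] * 100 + [1.0] * 100
detector = PageHinkley()
first_alarm = next((i for i, x in enumerate(stream) if detector.update(x)), None)
print(first_alarm)
```

In an adaptive system, an alarm would typically trigger a reset of the detector together with some response from the model, such as retraining on recent data or increasing the learning rate.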

Research focus: Creating robust online learning systems that can handle non-stationary time series common in real-world applications.

7.2 Edge AI for Time Series Processing

Bringing AI capabilities to edge devices for real-time time series analysis:

- Model Compression Techniques: Adapting methods like quantization and pruning specifically for time series models.

- Federated Learning for Distributed Time Series: Enabling collaborative learning across multiple edge devices while preserving data privacy.
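
To make the quantization idea concrete, the following sketch applies symmetric per-tensor int8 quantization to a weight matrix and measures the reconstruction error. The function names and the per-tensor scaling scheme are assumptions chosen for simplicity; production toolchains usually add per-channel scales, calibration of activations, and quantization-aware training. The sketch assumes the tensor is not all zeros.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization to int8 (assumes w is not all zeros)."""
    scale = np.abs(w).max() / 127.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to floating point."""
    return q.astype(np.float64) * scale

rng = np.random.default_rng(0)
weights = rng.normal(size=(64, 64))          # stand-in for a layer's weight matrix
q, scale = quantize_int8(weights)
max_error = np.abs(weights - dequantize(q, scale)).max()
print(q.dtype, q.nbytes, weights.nbytes)
```

Storing `q` takes one byte per weight instead of eight for float64 (or four for float32), and the rounding error per weight is bounded by half the scale.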

Emerging trend: Developing hardware-aware AI models that can optimize their architecture based on the computational constraints of the edge device.

8. Interdisciplinary Applications and Perspectives

8.1 Computational Biology and Bioinformatics

AI-driven time series analysis is revolutionizing our understanding of biological systems:

- Gene Expression Dynamics: Using deep learning models to analyze time-course gene expression data, uncovering complex regulatory networks and cellular responses to stimuli.

- Protein Folding Kinetics: Applying recurrent neural networks and attention mechanisms to model the time-dependent process of protein folding, complementing traditional molecular dynamics simulations.

- Circadian Rhythm Analysis: Employing periodic and quasi-periodic time series models to study biological clock mechanisms across different scales, from molecular to behavioral levels.

Research direction: Developing AI models that can integrate multi-omics time series data (genomics, proteomics, metabolomics) for a systems-level understanding of biological processes.

8.2 Neuroscience and Brain-Computer Interfaces

Time series analysis is crucial in decoding neural signals and understanding brain function:

- EEG/MEG Signal Processing: Using advanced deep learning architectures to analyze high-dimensional, noisy electrophysiological data for brain state classification and anomaly detection (e.g., seizure prediction).

- fMRI Time Series Analysis: Applying graph neural networks and dynamic causal modeling to understand functional connectivity patterns in the brain over time.

- Neural Decoding for BCIs: Developing real-time, adaptive algorithms that can translate neural time series data into control signals for prosthetic devices or communication systems.

Frontier research: Integrating multiple timescales of neural activity (from milliseconds to days) in AI models to capture both rapid neural dynamics and slower plasticity processes.

8.3 Earth Sciences and Climate Modeling

AI is enhancing our ability to analyze and predict complex earth system dynamics:

- Multi-scale Climate Modeling: Using hierarchical neural networks to bridge global and regional climate models, capturing interactions across different spatial and temporal scales.

- Extreme Event Prediction: Developing hybrid models that combine physical understanding with data-driven approaches to improve the prediction of rare but high-impact climate events.

- Earth System Data Assimilation: Employing AI techniques to efficiently integrate diverse observational data into earth system models, improving initial conditions for forecasts.

Challenge: Creating AI models that respect physical conservation laws and can extrapolate reliably beyond observed conditions, crucial for long-term climate projections.

8.4 Econometrics and Quantitative Finance

AI is transforming traditional time series methods in economics and finance:

- High-Frequency Trading Algorithms: Developing ultra-low latency neural networks capable of making trading decisions in microseconds based on real-time market data streams.

- Macroeconomic Forecasting: Using ensemble methods and causal AI to integrate diverse economic indicators and improve long-term economic predictions.

- Sentiment Analysis for Market Prediction: Combining NLP techniques with time series models to analyze news feeds, social media, and other textual data for market trend prediction.

Research opportunity: Developing AI models that can capture and adapt to regime changes in economic systems, a longstanding challenge in econometrics.

9. Future Directions and Challenges

- Transfer Learning in Time Series: Developing methods to effectively transfer knowledge between different time series domains and tasks.

- Few-Shot Learning for Time Series: Creating AI models that can adapt to new time series tasks with minimal training data.

- Quantum Machine Learning for Time Series: Exploring how quantum algorithms can be applied to time series problems for potential speedups in processing and analysis.

- AI for Multimodal Time Series: Integrating time series data with other modalities (text, images, etc.) for more comprehensive analysis and forecasting.

- Ethical AI in Time Series Analysis: Addressing issues of fairness, bias, and privacy in AI-driven time series applications, especially in sensitive domains like healthcare and finance.

- Neurosymbolic AI for Time Series: Combining neural networks with symbolic AI to incorporate domain knowledge and improve generalization in time series modeling.

- Continual Learning in Non-Stationary Environments: Developing AI systems that can continuously adapt to changing patterns in time series data without catastrophic forgetting.

As AI continues to evolve, its integration with time series analysis promises to unlock new capabilities in forecasting, anomaly detection, and complex system modeling. The challenge lies in developing AI systems that are not only powerful and accurate but also interpretable, efficient, and adaptable to the diverse and dynamic nature of real-world time series data. Because these advances draw on many disciplines, ideas developed in one field can carry over to others, which may lead to breakthroughs in how we understand and predict temporal phenomena.